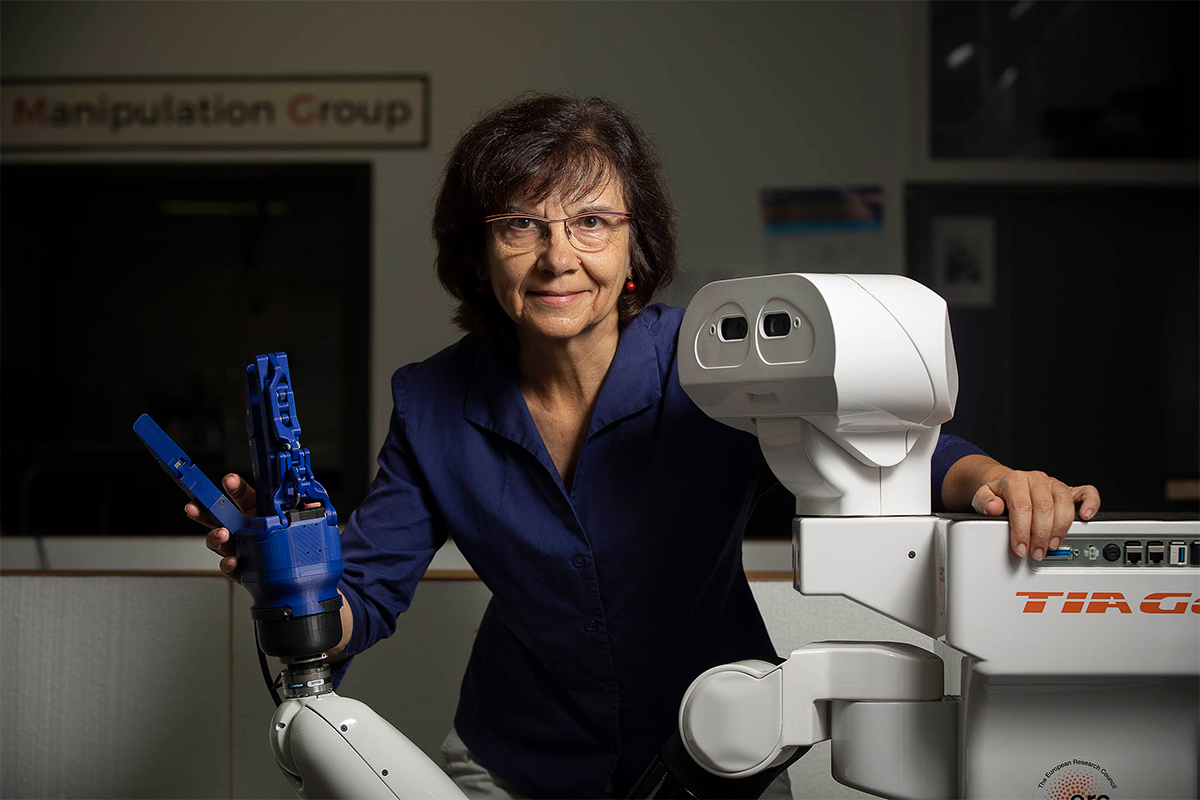

Technology is addictive. Carme Torras Genís (Barcelona, 1956) made this remark in one of her lectures, in which she often warns of the need for an ethical perspective in robot design. She is a research professor at the Institute of Robotics and Industrial Informatics (a joint centre of the Spanish National Research Council and the Polytechnic University of Catalonia), and currently heads a research team working on assistive robots that help people with reduced mobility or cognitive impairment to carry out everyday tasks such as dressing or feeding themselves.

A mathematician by training, she completed a master’s degree in computer science at the University of Massachusetts in the United States. Upon her return, she obtained a PhD in Computer Science at the Polytechnic University of Catalonia and consolidated a research career for which she has received numerous distinctions, ranging from the Rafael Campalans Prize of the Institute of Catalan Studies, the Narcís Monturiol Medal of the Regional Government of Catalonia for scientific and technological merit, and the Julio Peláez Prize for pioneering women in science, to more recent awards, such as the Julio Rey Pastor National Research Prize in the area of mathematics and ICTs, and the National Research Prize of Catalonia.

Carme Torras also cultivates literature. For her, science and literature are not two separate worlds. On the contrary, they are her passion and devotion. She has never understood the distinction and even went so far as to enrol in Philosophy and Literature at the Spanish National Distance Learning University (UNED), where she studied for several years. Literature, she says, allows her to reflect on the ethical challenges that surround the development of science and technology. She has published several novels in Catalan, such as Pedres de toc (Columna, 2003), Miracles perversos (Pagès Editors, 2012), Enxarxats (Males Herbes, 2017) and, translated into Spanish, La mutación sentimental (Milenio, 2012). She has also participated in numerous short story compilations, such as Científics lletraferits (Mètode, 2014) and, most recently, Barcelona 2059 (Mai Més, 2021) and Somia Philip Marlow en xais elèctrics? (Alrevés, 2012).

The interview was conducted online from three different cities: Valencia and Barcelona (Spain) and Leicester (United Kingdom). The pandemic has helped to normalise virtual encounters. Once, they were the exception, but now they have become the norm.

We live in an increasingly high-tech society, but this pandemic has shown us, among other things, that care for our elderly still has a lot of room for improvement beyond merely technological aspects. What should the role of robotics be in this context?

In our laboratory, we try to make robots that help people with minor mobility restrictions to feed and dress themselves so that they can continue to be autonomous. During the pandemic, we were also able to provide cognitive training for people with mild mental disabilities, such as Alzheimer’s, together with the ACE Foundation, which treats this disease. After a few months, they opened the centre and we brought a robot that uses the same game board that the therapists use to diagnose and monitor the disease. For example, you have to put numbers in increasing or decreasing order. Depending on the disability, the numbers can range from single digits to three digits, so it can get complicated… And the robot provides the same help that a therapist would give. That is to say, if it notices that the person is not succeeding, it says «you can find it on this line» or «it is red»; there is even a level of help in which it picks up the piece and places it. It also encourages them: «very good», «you can do it»… We saw that some people, when they hesitated before placing a piece, looked at the therapist: if the therapist nodded, they placed it, and if the therapist shook their head, they reconsidered. Well, the robot does the same thing: if the move is right, it confirms it; otherwise, it closes its eyes.

And would this replace the figure of the therapist or is the goal to train patients autonomously?

In some centres there is only one therapist for twenty patients, so having this option… You do not need much: just an arm and a head are enough… And besides, the system can record the evolution of the patient over a period of months. The robot’s capacity to store data is greater than that of the therapist.

And is the fact that older people are commonly less trained in technology an obstacle or is the robot able to overcome it?

We anticipated that they might be a bit scared or not understand what was going on and it turned out to be the other way around. They loved it, and almost all of them were over seventy! It is also possible that, because they went through the pandemic for so long without much stimulation, it was like a breath of fresh air for them.

How expensive are these robots, and can they be implemented?

The one we have used, we already had. It is a mobile robot, with wheels, an arm, a camera, a series of sensors… and, yes, this one costs 50,000 euros. But you would not need that much, as I said. And bear in mind that these robots would not be used by just one person. They would be used by many patients. With other applications we are exploring, such as feeding, there are already small devices that are like a sort of articulated lamp, and these cost as little as 7,000 euros. What we do is fit them with a camera that «sees» when the patient opens their mouth – which means they want to eat – and then the arm feeds them. This application is a win-win: for caregivers, because mealtimes can get a bit busy, and also for people who do not have arm mobility, because they can control the machine themselves. I think this application will soon be widespread because it gives the person significant autonomy.

In any case, this interaction between robots and humans generates many expectations, but also fears that they will take away more and more of our autonomy as individuals.

Yes, of course, but this is also the case with machines in general. Nobody remembers telephone numbers any more, for example. Technification means that there are tasks that are now performed by machines. My idea is that we use machines for the tasks in which they are more capable and faster, and reserve for ourselves those that require human capacities such as creativity, empathy, life experience, etc. I see us moving towards symbiosis, rather than substitution. I do not believe in the singularity and the idea that machines will eventually dominate the world. I believe in the combined capabilities of people and machines.

In this utopia of machines taking over the most repetitive tasks, we have to take into account that, in the labour system, there are people who do these jobs. How do we make it so that everyone can access more creative work? How do we solve this from an educational point of view?

I like to think of them not as jobs but as specific tasks, because robotics is becoming more and more collaborative: the machine does the heavier tasks and the human supervises or does the fine control tasks. This is the trend in industrial robotics at the moment. However, this collaboration with the machine requires higher qualifications. Therefore, it is true that there are jobs that will eventually disappear. But there are jobs not classified as high-level that will not disappear, because there is always an element of empathy needed, for example, in human care.

In the UK, as Brexit has made it more difficult for immigrants, the country has stagnated. For example, some supermarkets have a shortage of products because the warehouses are out of stock, there are no truck drivers… In this machine utopia, this can also happen.

In this sense, we have a project with a company to carry out this type of task, and also to box online purchases. This will end up being done by robots, because it is just taking products from here and there and packing them to be sent. Many of these tasks will end up being mechanised.

This division of tasks has been one of the arguments of entrepreneur Elon Musk when he presented his humanoid robot project a few months ago. How does the scientific community welcome these announcements?

There is very little information on what this robot will be like. He seems to have used all the sensing aspects of self-driving cars in the robot, but I have not found much information on the details. Also, I am not a big believer in these humanoids. I am more of a believer in domestic robots, which do certain tasks and do not need to be more anthropomorphic than necessary. A robot has no need for legs if it has to move on a smooth surface, and it has no need for a very expressive face if it will only help you get dressed… They are not the robots from the movies, they are just another tool.

So robotics has more to do with household appliances than with androids? Is it more about function than form?

For me, it is, yes, because, besides, if they are given a very human form, they can deceive children or the elderly. Children’s empathy is still developing, and if they relate so much to a machine, they may miss the window of learning. An elderly person with no human caregiver might have problems of isolation.

Perhaps the limits are clearer in assistive robotics, but what happens in the case of military robotics?

That is the main danger. All these autonomous weapons of destruction like drones, which can target a certain type of person, I find horrifying. And it is not just me, mind you: the entire artificial intelligence and robotics community is very concerned about it. There is a campaign, which can be consulted on the website «Stop Killer Robots», which many people have co-signed. The scientific community is almost unanimous, except, of course, those working in military research, in agencies like DARPA.

Playing devil’s advocate, doesn’t military research also advance civilian research? Can it have a positive aspect in this sense?

Yes, of course, and vice versa. For example, many of the things that are developed in machine vision are not done for military applications, but are then used in military applications. Research is always like this: you can use it one way or the other. But I do see the big threat. In this sense, the series Black Mirror exemplifies it very well. And not just in the military. There is an episode, «Hated in the Nation», in which they send [robotic] bees to attack certain people. I find it brutal.

And apart from these manifestos, which are necessary, what can be done?

It has proved to be a very complicated issue. Some organisations have produced guidelines on ethical robot development. For example, for two or three years, the Institute of Electrical and Electronics Engineers (IEEE) organised various ethics committees specific to each environment – education, health, government – and open to contributions, because it is not the same to legislate in Japan or in Europe. Then they found that governments accepted many aspects [of this initiative], but they made them cut back and remove the section on military robotics because there was no way to agree there. So now they have published a minimal version of these principles, minus that part. This is very hard to accept because it goes in a different direction. It is all very well to talk about social robotics, artificial intelligence, to set certain standards, but there is no way to touch the military, right?

As for legislating for different cultures, there is a study related to autonomous cars asking who should be saved from being run over in case of an unavoidable accident, and the answers were different, depending on the area. For example, in Asian countries they saved older people more and in Europe we protected children’s lives more. When we get to the ethics of machines, will we have different ethics? Will Asimov’s laws of robotics be different depending on the country where they are applied?

I believe that there are some basic principles that are universally applicable, but others are different depending not only on the country, but also on the circumstances. A robot in the field of education is not the same as a robot in the field of care. It is one thing to establish the basic principles and another to establish the regulations in each case. In fact, the European Union has also drawn up a guidelines document on artificial intelligence, and each country is adapting it. That is to say, it is being particularised.

Regarding automated machine learning, to what extent can human complexity be translated into algorithms?

This is an interesting two-fold issue. There are positive aspects such as, for example, Google Translate, where people propose changes depending on the context and the programme gradually learns from them. On the other hand, there is the case of Microsoft, which deployed a chatbot called Tay on Twitter to learn from what people were saying on that social network, and in just sixteen hours they had to shut it down because it ended up being a Nazi, sexist, offensive chatbot. That’s terrible! It makes you think that we are at the mercy of people who are sometimes not very aware of the importance of their contributions to the Internet. One of my obsessions when I teach technology ethics is to make people aware, because sometimes these things are done unconsciously, with all sorts of biases that are introduced [in programming], either because of ignorance or because the data these programs learn from are not properly chosen.

Speaking of Twitter and social networks, what role could algorithms and bots have played in the disinformation about the pandemic?

I do not think the bots are to blame. The origin is always human. With all this pseudoscience nonsense, there are always groups that sponsor it. The Internet globalises and reinforces it. Now there are people working on artificial intelligence to detect fake news, to combat it; they are good hackers, white hat hackers.

One debate during the pandemic has been whether to censor certain profiles that spread misinformation. If we give power to some algorithm or system that allows us to clean up social media, will we not end up silencing more than we should?

That is why we always say that it is important to educate, starting in primary school. In other words, we used to study other kinds of things – civic values, etc. – which is perfectly fine, but now students should also be encouraged to think critically from an early age: they should not believe everything they read, they should look for information and have the ability to distinguish correct information from incorrect information… I think that is feasible.

If you want to be critical, you need to know how the thought process works. This is one of the problems of our education systems, which separate science from literature.

It is appalling. At the Polytechnic University of Catalonia, which is where I work, there are no humanities programmes, of course. And now they have set up an agreement with the Open University of Catalonia so that engineering students can take some subjects and have them counted as credits, because they wanted to introduce humanities content in their degrees, but had no way of doing it. This was a very good initiative. This complementarity is needed, but the infrastructures are not geared towards this.

In fact, your novel La mutación sentimental (“The Sentimental Mutation”) is also used as teaching material to reflect on ethics.

In the United States, ethics courses have been taught in industrial engineering and computer science degrees for years. They used to teach them with philosophical texts that were a bit abstract for technology students. A few years ago, some teachers started to use novels by Isaac Asimov, Ray Bradbury, and Philip K. Dick to motivate students better. At the end of my talks, I always speak a little about the importance of ethics and mention the novel. At a conference, there was a person from MIT Press in the audience and she said to me: «If we translate this novel, would you make some materials for this subject?» I was thrilled, of course, and I made those materials. And I know they have been used a lot. You have to buy the novel, but the auxiliary materials are free for educators. Here, Pagès Editors did something similar, adapting the materials for secondary school. And they are also free.

Reflecting on ethics, restoring or improving our capacities by means of technological implants is a reality in several areas. Where are the limits?

The limits? For the time being, the precautionary principle is being applied. We do not perform an intervention if we do not know what the implications are, even though there are always those who will ignore this principle. I think that it is impossible to set limits to technological development. In other words, everything that can be done will be done. That is why I like to counter it with training. Some people say «it is fantastic for alleviating disabilities». But, for example, [Oscar] Pistorius runs faster [with prostheses] than people without disabilities. In a story I wrote, I discussed the duality between technology outside and inside the body. Of course, I am dedicated to robots, so that they can help us from outside, but then there are all the transhumanists, who think that we will get chips for everything: to increase our memory, for immediate translation of any language… We already have cochlear implants, which are very good for people who are deaf, but the border between alleviating a disability and improving the body is always very easy to cross.

So, is the future more about robotics outside our body? What are the main difficulties you see in mixing biology and machine? Is it possible the way we see it in science fiction movies?

As far as the body is concerned, it has already been achieved, because there are already mechanical hands activated by the nervous system. It is a very delicate surgical procedure, but it has been achieved. What I do not feel comfortable with is touching the brain. There are very positive applications such as, for example, implants for people with Parkinson’s disease, which allow them to unblock themselves. I find this a marvellous thing. The gain is such that, should they have any inconvenience, it would be justified. But to go further… in the novels I propose things like that, but, of course, it is not the same.