Everything they know about us

Is it possible to browse privately in the Big Data ocean?

«The use of computers in the investigation of citizens’ financial, medical, mental, and moral status is a major- and growth-industry». The statement could have been taken from one of the texts that have, for some time now, been drawing attention to the business of collecting and processing personal data. But it was written many years ago. It is from the book Electronic Nightmare: The New Communications and Freedom, published in 1981 by John Wicklein (Wicklein, 1981, p. 191), a prominent journalist and communications expert who had previously worked as editor and publisher for The New York Times, and who had been responsible for news broadcasts on several television networks. The quote is meant to demonstrate that the problem of computers and telecommunications threatening privacy is not new. But while in 1981 it concerned only well-informed people like Wicklein – and many governments – in recent years the power of computer tools and the ease with which data can be transmitted have given it a new dimension and the concern has spread.

In the work, Wicklein not only makes a very rigorous and informed presentation of the beginnings of interactive television, videotext, or electronic journals, debating legal, economic, political, and social aspects, but also details a hypothetical situation in an extraordinarily lucid way (Wicklein, 1981, pp. 28–30). He situates it in «1994, when two-way, interactive cable has spread to hundreds of cities across the country» – he actually fell short in that. He describes a fictional Martha Johnson, a candidate trying to oust the mayor of the no less fictional city of San Serra, in southern California. Using an interactive television, Johnson orders a book that advocates the abolition of laws that obstruct consensual sexual activity among adults. She also buys a deodorant spray. When she leaves, her husband uses the same device to respond to a survey, in which he opposes allowing lesbians to teach in public schools. Then he purchases a pornographic film. He also makes a bank transfer, because he has been notified that his wife’s book cannot be sent because they have late payments.

«Much more information circulates every day today than at any other time in history»

The cable company has been gathering information. And there are people interested in it. Martha Johnson’s opponent, the mayor, learns that her husband has responded to a survey in which he expresses an opinion contrary to what she stands for, and that he has purchased a pornographic film. The mayor knows it was the husband because the monitor installed in front of the Johnson house reported that the woman had left and not returned. Another client is the publisher of a porn magazine, who sends Mr. Johnson a copy in a discreet envelope. An environmental group now knows that the candidate buys spray deodorants that destroy the ozone layer. And a credit rating company learns that Johnson’s purchase was refused, a piece of information that it will include in the couple’s file.

In 1981, the hypothesis might seem exaggerated. Maybe in 1994 as well, though not so much. But in 2018 we can see that, despite being a visionary, Wicklein even fell short.

How much information circulates on the web and what can we do about it?

We do not know how much information was circulating in the world in 1981, but only a small fraction must have travelled in electronic format. We do have an approximate idea of how much information there was worldwide in 1997 thanks to the computer scientist Michael Lesk. He calculated that there were 12,000 petabytes (PB) of information in total (Lesk, 1997) – 12 billion gigabytes, to make it a little more understandable or less incomprehensible. Today, 500 PB of data, the equivalent of 6,600 years of high-definition video, are transmitted every hour. This means that the 12,000 PB Lesk calculated are transmitted every day. Formats are very diverse. About 200 million emails are sent and half a million comments are posted on Facebook every minute. More than seventy million photos are published on Instagram every day. Add to that Internet queries, mobile phone messages and calls, tweets, other social networks, images recorded by surveillance cameras, private cameras, satellite images, banking transactions, clinical data… Whether the petabyte calculation is approximate or not might not even be worth investigating. The simple conclusion is that much more information circulates every day today than at any other time in history.
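The arithmetic behind these figures can be checked in a few lines. The following is only a sketch: it assumes decimal (SI) units, in which one petabyte equals one million gigabytes, and it takes the 12,000 PB and 500 PB per hour figures from the text as given rather than verifying them.

```python
# Assumption: decimal (SI) units, so 1 PB = 1,000 TB = 1,000,000 GB.
GB_PER_PB = 1_000_000

LESK_TOTAL_PB = 12_000      # Lesk's 1997 estimate, as quoted in the text
TRAFFIC_PB_PER_HOUR = 500   # hourly traffic figure, as quoted in the text

# Convert Lesk's total to gigabytes.
lesk_total_gb = LESK_TOTAL_PB * GB_PER_PB

# Scale the hourly traffic to a full day and compare it with Lesk's total.
traffic_pb_per_day = TRAFFIC_PB_PER_HOUR * 24
days_to_match_lesk = LESK_TOTAL_PB / traffic_pb_per_day

print(f"Lesk's total: {lesk_total_gb:,} GB")
print(f"Daily traffic: {traffic_pb_per_day:,} PB")
print(f"Days of traffic needed to match Lesk's total: {days_to_match_lesk}")
```

Under these assumptions, one day of traffic (24 hours at 500 PB each) adds up to the same 12,000 PB that Lesk estimated for all the information in the world in 1997.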

«The problem of computers and telecommunications threatening privacy is not new»

What can we do with so much information? Very positive things. And also very negative things that require strong control measures. This large amount of data and data processing is called big data. Big data includes not only the actual data, but also the IT tools to process it. One is useless without the other.

There is no single and precise definition of big data. What we should consider big data and what is simply a huge amount of data is not clear. In any case, big data does have certain characteristics. The three Vs of big data are volume, variety, and velocity. The first condition is clearly met, as we have seen. The second one is also fulfilled thanks to current production and data transmission technologies. And we would argue that there is no need to go into the third condition in order to justify it. Two more Vs are often added: veracity and value. Data must be reliable, which is not always the case. And also valuable, which is easier to prove.

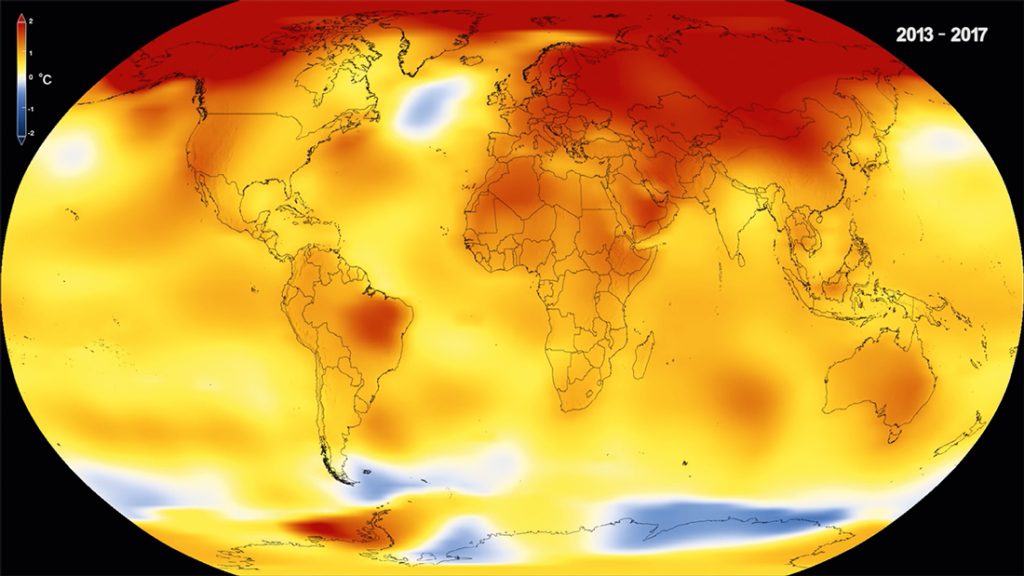

Thus, big data is of great importance in a wide range of fields. Any field requiring a lot of data and processing can benefit from big data techniques. Powerful computers make it possible to increase calculation speed, and complex algorithms transform data sets into useful information. Data can be very scientific – interactions between particles in an accelerator, astrophysical positions of stars and galaxies… – or very ordinary – the traffic management or cleaning services of a city. All areas of research can benefit from big data. A study on the genetic mutations that are present in a certain type of cancer and do not appear in healthy people, associated with lifestyles and other personal characteristics, could not be carried out without big data and a supercomputer. Climate studies would be much less useful if we could not rapidly process huge amounts of data.

The data we provide unknowingly

But data has value in many other areas, and who is using it, how, and what for is not always public knowledge. If Wicklein was able to imagine that much damage to the Johnsons with still limited and not very widespread technology, imagine what can be known about us today.

The data we provide happily and voluntarily can reveal a great deal about us. Most people search the Internet using search engines that record what we were looking for and, with their so-called cookies, which websites we visited. We retweet messages that can be seen by millions of people and that can be recorded, and we post comments on Facebook or photos on Instagram. Not everyone does all that, or not very often, but in any case we provide data to receive information, to comment on things, or out of pure fashion or narcissism. One invaluable piece of advice is to be discreet and cautious. However, as we shall see below, that is not enough. Cambridge Analytica’s use of 87 million Facebook user profiles is a good example of how we do not know where our information will end up.

In 2015, some researchers wanted to create an algorithm that could assess our personality using the trail we leave on Facebook (Youyou, Kosinski, & Stillwell, 2015). They compared its assessments with questionnaires filled out by peers, friends, partners, and family members. And they saw that, based on just ten «Likes», the algorithm knew the user as well as a coworker. After 70 «Likes», it was as accurate as a roommate. At 150, it knew as much about them as a parent or a sibling. And with 300, it was as accurate as their partner could be. Just by using a small part of the trail that we leave on the Internet, one can elaborate a sufficiently approximate profile.

«Any field requiring a lot of data and processing can benefit from big data techniques»

This may seem less important when compared to a prior study (Kosinski, Stillwell, & Graepel, 2013) where the ability of these algorithms to guess other things was demonstrated. Conducted with more than 58,000 volunteers, it showed that the algorithm was capable of predicting, in 88% of the cases, if the users were heterosexual or homosexual, in 95% if they were African-American or Caucasian, in 85% if they were Republicans or Democrats, in 82% if they were Christians or Muslims. The researchers even felt capable of guessing if users were intelligent in 78% of the cases and if they were emotionally unstable in 68%.

When alerted about privacy threats, many people argue that receiving advertising or commercial proposals is not a serious problem. They say you can just stop paying attention. While possible, it can be a bit cumbersome. But with massive data it is no longer about that, but about far more sensitive information, which everyone has the right to keep secret if they so wish, such as ideology, religion, or sexual preferences. There are countries where homosexuality is a crime and can even be punished with a death sentence. For an algorithm to be able to predict whether a person is homosexual is neither innocent nor harmless, and the same goes for religion, political opinions, or social activism.

Information for insurance companies, corporations…

Many of the things that can be deduced from our data do not affect just one group, like homosexuals. We all generate medical information in one way or another. We are not talking about all that is written on our medical records, which can be stolen by cybercriminals. There are other possibilities. If we do a search on a particular disease, Google knows we did. If we buy a book about a certain disease, Amazon knows we did. When we speak out about a particular disorder or are looking for people who have it or their relatives, the Internet knows it.

This circulating information might be collected by someone, connected with many other pieces of information about our activities, and lead an algorithm to believe that we have the disease or are concerned that we may in the future. Perhaps we were simply looking for that information out of curiosity or for whatever other reasons. But if the information reaches insurance companies – and it may very easily do so – we may have problems signing up for policies or life insurance. And should it reach companies in general, they may have a lot of information about the possible medical problems of a job candidate. Some companies accumulate medical and non-medical data about millions of people and use it to either accept or deny a request for medical assistance (Allen, 2018). The algorithms they use are not infallible.

An algorithm is a series of ordered instructions that can be used to deal with a specific problem or operation. Some are very simple, like the one that allows us to check the result of a division: quotient times divisor plus the remainder must be equal to the dividend. Others are very complex, with hundreds of lines of computer code. Google’s search engine must have a few thousand million lines of code.
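The division check mentioned above is simple enough to write out in full. This is a minimal sketch in Python. The function name `check_division` is hypothetical, and the extra condition that the remainder must be smaller than the divisor is an assumption added here for non-negative whole numbers; the text only states the multiplication rule.

```python
def check_division(dividend: int, divisor: int, quotient: int, remainder: int) -> bool:
    """Check a division result: quotient times divisor plus remainder equals the dividend."""
    if divisor <= 0 or dividend < 0:
        # Assumption: this sketch only handles a non-negative dividend and a positive divisor.
        raise ValueError("expected a non-negative dividend and a positive divisor")
    # Added assumption: a valid remainder lies between 0 and divisor - 1.
    remainder_in_range = 0 <= remainder < divisor
    return remainder_in_range and quotient * divisor + remainder == dividend


# 17 divided by 5 gives quotient 3 and remainder 2, because 3 * 5 + 2 == 17.
correct = check_division(17, 5, 3, 2)
# A wrong remainder fails the check, because 3 * 5 + 1 == 16, not 17.
wrong = check_division(17, 5, 3, 1)
print(correct, wrong)
```

Each step is an explicit instruction that the computer follows in order, with no understanding of what a division is; that is all the word algorithm means here.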

Algorithms do not think. Algorithms follow instructions and assimilate data. But someone had to tell them what data to assimilate. Data can be found online or in archives or publications that reflect society only approximately (Duran, 2018, pp. 147–165). Algorithms are designed and built by people and reproduce their makers’ biases. They learn from the information they are provided or from what an intelligent system collects. They do not understand nuances if they are not trained to take them into account, and so they may reach peculiar conclusions (some examples can be found in O’Neil, 2016/2018).

In addition, many algorithms are not validated. And companies tend to hide them because they consider them to be intellectual property and protect them from competition. As a result, they are black boxes (Pasquale, 2015) in which we are judged by what our data says, not with absolute certainty, but simply with probabilities. They judge our potential medical expenses according to the neighbourhood where we live, the educational level of our parents or friends, and the comments they make. We are not individuals, but processed statistics. And vital decisions are made based on that.

From faces to genes

The potential of big data to deliver positive things is growing every day. Research projects in a wide range of fields are advancing science and public management or making our lives easier. But they also increase the possibilities both of capturing data without us knowing it and of applying them with unclear and unknown intentions. And ignorance leads to helplessness.

«Just by using a small part of the trail that we leave on the Internet, one can elaborate a sufficiently approximate profile»

Thus, face recognition systems are becoming more and more accurate: they can identify people in photos or videos – people who may not want to be identified – and associate the face and name with other information found on the web. Sometimes, they do not even need these systems. Facebook asks users to tag people they know who appear in pictures, so you do not even need to have a Facebook account to be tagged on Facebook photos! Based on voice and speech patterns, some systems can identify whether a person is showing signs of Parkinson’s or Alzheimer’s, even before a medical diagnosis is possible. This is very positive for prevention or early diagnosis, but less so if companies use it to find out whether a worker – or a potential insurance customer – will show those symptoms in the near future.

Genetics adds another dimension to possibilities and risks. This science has come a long way, but in addition to discovering many genes linked to diseases or to the predisposition to suffer from them, it has also revealed their great complexity. Today we need to take into account not only genes, but also epigenetics, i.e., alterations that can affect the expression of genes due to environmental aspects such as food, pollution, or life traumas and that can even be transmitted to one’s offspring.

Access to sensitive user information by interested third parties is a hot topic. In early 2018, the Cambridge Analytica scandal broke when it was discovered that this British consulting firm had used information from 87 million Facebook users to create personalised advertisements – including fake news – in favour of Donald Trump’s victory in the 2016 US election campaign. Cambridge Analytica’s role in other election campaigns immediately came under public scrutiny. The links between the consulting firm and the Vote Leave campaign in the United Kingdom’s 2016 referendum on the country’s continued membership of the European Union led many citizens to take to the streets to protest what they considered to be a manipulated process. / John Lubbock

Therefore, some genes are decisive, but many others are not. Giving away genetic information presents a risk that depends on whose hands it falls into. But it also affects our family environment. We can prevent our data from being on file, but if a direct relative provides theirs, they are also offering a part of our genetic identity. And now it is not too difficult for anyone to do so. Companies such as 23andMe offer genetic tests that reveal a predisposition to certain diseases or search for potential relatives in their huge database.

Conclusions

Since Wilhelm Roentgen discovered X-rays in 1895 and opened the way to radiology, our body has not stopped becoming more and more transparent (Duran, 2016). And big data has made not only our body transparent, but also our actions and thoughts. This has immense potential, but also many risks (Duran, 2018).

The prudence of users – while essential – is not enough to avoid these dangers. That is why we need regulations to protect their rights, as well as technologists and companies who understand the need to set limits to computer tools. The European General Data Protection Regulation, applicable across the Union since May 2018, aims to ensure that everyone can choose which information they provide, to whom, and for what purpose. They can even demand to be informed about the criteria considered by an algorithm that contributes to a decision affecting them – for a loan, a job, legal action…

These rights are essential. But it is also important to properly study the implications of mathematical models so that we do not draw biased conclusions that affect our present and our future. These powerful tools can make a more just society, as long as we are willing to not only sing their praises but also to admit and balance their limitations. As Joshua Blumenstock (2018), director of the Data-Intensive Development Lab and professor at the University of California, Berkeley, noted in a paper, the use of big data for development offers many possibilities, but it requires a version of data science «that is considerably more humble than the one that has captured the popular imagination». Humility is often necessary for success, because it helps correct or prevent mistakes. Therefore, we will have to include it and enforce it in the algorithm that will lead us to the future data society.