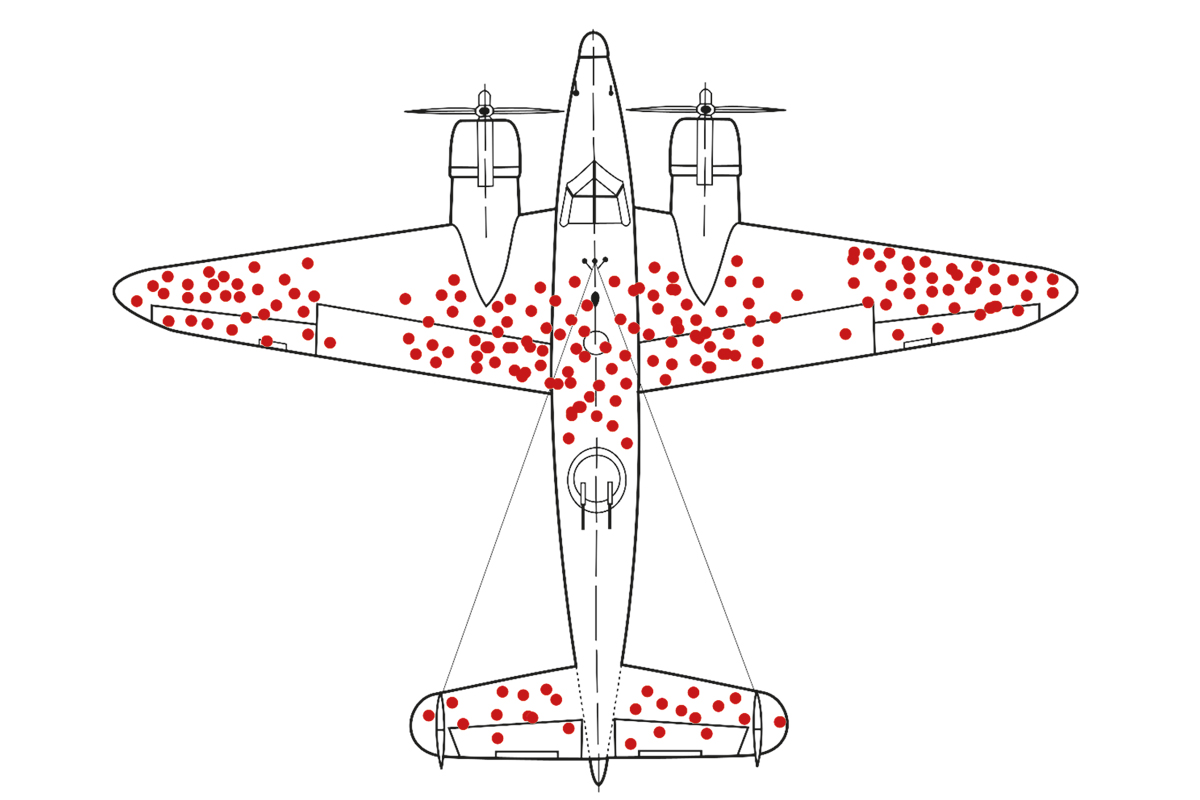

Mathematician Abraham Wald was among the thousands of Jews forced to flee Europe in the years before the outbreak of World War II. After emigrating to the United States with his family, Wald was hired by the Statistical Research Group at Columbia University, where his work would prove pivotal to the course of the war. Because armouring the entire fuselage of Allied bombers would have made them far too heavy, Wald faced the critical task of deciding which parts were most important to protect. He examined the distribution of bullet hits on bombers returning from combat and observed that the hits were concentrated in very specific areas (as can be seen in the picture). The solution seems obvious: reinforce the areas with the most hits. Indeed, this is the reaction I have repeatedly observed among students confronted with this problem. Nothing could be further from the truth. As Wald realized, the bullets were in fact striking the aircraft more or less at random. Bombers hit in the areas that appeared intact on his diagrams simply never made it home. Wald therefore rightly concluded that the armour belonged exactly where the returning aircraft were unscathed, because those were the parts critical to a bomber's survival.

This anecdote has become a famous example of what is known as «survivorship bias»: a distortion in our reasoning caused by the absence of data on those who did not survive a particular event. Among other errors, survivorship bias leads us to dwell on the traits of successful people even when we have no evidence that those traits have anything to do with their success. When the media reported that Bill Gates and Mark Zuckerberg never finished college, a New York Times article warned of an increase in college dropouts. Yet the countless dropouts who never built anything like Microsoft or Facebook do not make the news. In reality, the statistics unambiguously indicate that a college degree improves both the chances of finding a job and the average salary earned.

Survivorship bias is not an oddity. Our minds are swarming with similar irrationalities. The so-called «confirmation bias» causes us to accept arguments that confirm our beliefs and ignore those that refute them, while the «bias blind spot» prevents us from detecting flaws in our own reasoning, even though we are excellent at detecting these same flaws in others. It is an unfortunately explosive combination that fuels growing polarisation in the age of social media. In the same vein, psychologist Daniel Kahneman's findings on the psychology of judgement, which earned him the Nobel Prize in Economics in 2002, suggest that irrationality accounts for an important part of economic behaviour, challenging the assumption of rational agents on which much of modern economic theory rests (I cannot recommend his delightful book *Thinking, Fast and Slow* strongly enough).

Some of these cognitive biases appear to be adaptive: the result of evolution shaping our minds not to be perfectly rational but to survive and reproduce in the face of the ecological challenges we have faced as a species. In some contexts, this seems to have favoured «irrational» ways of processing information because they can be more effective, for instance by allowing us to make quicker decisions, or because self-deception is sometimes the best way to get what we want within our social group. Other cognitive biases seem simply to result from the limits of our ability to process information. Whatever their origin, they should teach us some humility and tolerance.